The connectivity model refers to the network access and permissions that allow your Kubernetes cluster and event broker services to function correctly. The types of connectivity you need to consider in your deployment are:

- Messaging Connectivity: The connectivity required for messaging traffic (between event broker services and from applications to event broker services).

- Management Connectivity: The connectivity required for you to administer your event broker services using the Solace CLI, Broker Manager, or SEMP.

- Operational Connectivity: The connectivity required to set up your Kubernetes cluster and launch event broker services.

For more information, see Understanding the Types of Connectivity to Configure.

Configure the external connectivity carefully. Incorrect configuration causes issues when you install the Mission Control Agent later, so review and implement all of the required configuration changes.

In environments that restrict access to the public Internet, you must configure the external connectivity for the workloads in the Kubernetes cluster. We recommend that you configure the broker connectivity at the same time. The steps are:

- Configure the hosts, IP addresses, and ports to allow access in environments where external connectivity is required for workloads in the cluster. For a complete list, see Connection Requirements for SAP Integration Suite, Advanced Event Mesh Components.

  Networks that restrict public Internet access often use an HTTP proxy, but you can explicitly choose to use an allow list. For more information, see Options When External Connectivity Is Limited.

- Provide access to the Solace Container Registry, in either gcr.io or in the Azure China registry. If you require access to the Azure China registry, contact SAP. Access to the Solace Container Registry is required for deployment. If direct access to the Solace Container Registry is not possible, you can proxy it via your own container registry. SAP will configure the Mission Control Agent to use your proxy registry. Credentials for the Solace Container Registry can be obtained through the Cloud Console; see Downloading the Registry Credentials for the Solace Container Registry.

- Also, consider configuring the ports required for the messaging connectivity. For more information, see Messaging Connectivity for Outbound Connections and Client Applications.

- We recommend that you use a LoadBalancer, but other connectivity options are available, such as NodePort and External IP. If static IPs for outbound connections from event broker services are required, use a NAT. For more information, see Exposing Event Broker Services to External Traffic.

After you have completed the necessary connectivity configuration and installed your Kubernetes cluster, you are ready to move to the next step.

Understanding the Types of Connectivity to Configure

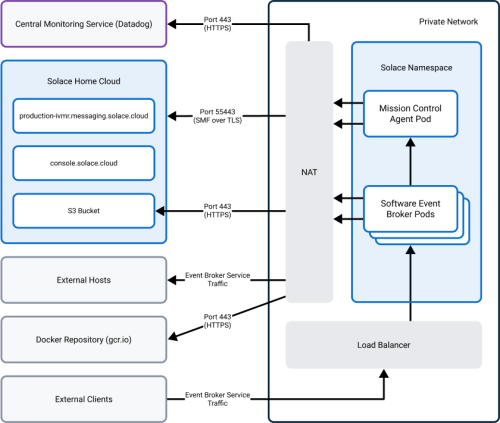

The following diagram shows the correct configuration of operational traffic (external connectivity of workloads from the cluster) and messaging traffic (event broker outbound connections and client application connectivity to the event broker services) that need to flow in your data center. Management traffic (SEMP, Solace CLI, and Broker Manager) is not shown, but could follow the path labeled "Event Broker Service Traffic."

Advanced event mesh must be configured to support the following:

- Operational Connectivity

  - This traffic carries the communication required for proper functioning of the Kubernetes cluster and permits components of advanced event mesh to install, operate, and monitor event broker services. The installation of the Mission Control Agent requires this connectivity for communication with Home Cloud.

  - For more information, see Operational Connectivity.

- Management Connectivity

  - Management connectivity enables the transmission of commands to directly administer event broker services, for example, to configure queues, subscriptions, client profiles, and other settings. These commands can be sent over SSH to the Solace CLI, from a browser to Broker Manager, and from applications to the event broker services using SEMP. Management traffic requires that the correct ports be enabled. This management connectivity is required for the components that are external to the Kubernetes cluster.

- Messaging Connectivity

  - Messaging traffic refers to the transmission of events and messages between different event broker services and between applications and event broker services. It also includes outbound connections initiated by the event broker services, for example, REST Delivery Points (RDPs). SAP recommends that you configure the messaging traffic for your event broker services at the time you request the IP addresses and ports required for the workloads in your Kubernetes cluster.

  - You can specify the client ports used to connect to event broker services and any ports for outbound connections initiated by the event broker services to your VPC/VNet. For more information, see Messaging Connectivity for Outbound Connections and Client Applications.

For security details about the Mission Control Agent, see Mission Control Agent.

For details about the specific connections required by various components, see Connection Requirements for SAP Integration Suite, Advanced Event Mesh Components.

For more information about the default event broker service port configurations, see Load Balancer Rules Per Service (Default Protocols and Port Configuration).

Operational Connectivity

You must configure your Kubernetes cluster to allow the required control traffic to deploy advanced event mesh.

The connection details are required to install the Mission Control Agent (for the management of event broker services and connecting to the Home Cloud) and to monitor the event broker services (via Datadog). For a complete summary of the hosts and ports, see Connection Requirements for SAP Integration Suite, Advanced Event Mesh Components. For more information about Datadog, see Connectivity for the Monitoring of Event Broker Services.

Options When External Connectivity Is Limited

If your networking policies for your VPC/VNet prevent connectivity to the public Internet, SAP recommends that you configure your Kubernetes cluster and network using one of the following options:

- HTTP Proxy Server and Open Ports for Home Cloud

  - Use an HTTP proxy server and explicitly allow the required IP addresses and ports of Home Cloud. In this case, you (the customer) must also provide details (URL, username, and password) of the HTTP/HTTPS proxy server to SAP when you're ready to install the Mission Control Agent. You must explicitly allow the IP addresses and ports for the Home Cloud because it uses Solace Message Format (SMF) as the protocol, not HTTP/HTTPS. For more information, see Considerations When Using a Proxy for Operational Connectivity between Mission Control Agent and Home Cloud.

- Only Open Ports Required for Advanced Event Mesh

  - Explicitly allow only the IP addresses and ports as described in Connection Requirements for SAP Integration Suite, Advanced Event Mesh Components.

Connection Requirements for SAP Integration Suite, Advanced Event Mesh Components

You must configure the connectivity for the Kubernetes cluster to allow the deployment of advanced event mesh components. This operational traffic is required to install the Mission Control Agent (for the management of event broker services and connecting to the Home Cloud), to monitor event broker services (Datadog), and to retrieve container images from Solace's Container Registry (gcr.io).

The following connection details are required for Kubernetes deployments, such as Azure Kubernetes Service (AKS), Google Kubernetes Engine for Google Cloud (GKE), and Amazon Elastic Kubernetes Service (EKS). These connections are required for the Operational Connectivity when you deploy advanced event mesh to Customer-Controlled Regions.

For more information about the security architecture for Customer-Controlled Regions, see Deployment Architecture for Kubernetes and Security Architecture for Customer-Controlled Regions.

| Connection | Host | IP Addresses | Port | Description |
|---|---|---|---|---|
| Mission Control Agent to Home Cloud | Regional Site for United States: production-ivmr.messaging.solace.cloud | Regional Site for United States: | 55443 | TLS encrypted SMF traffic between the Mission Control Agent and the Home Cloud. For more information, see Information Exchanged Between the Home Cloud and the Mission Control Agent. |
| Datadog Agents to Datadog Servers | | There are multiple IP addresses that must be configured for both the Mission Control Agent and the event broker services. For the Mission Control Agent: You must configure the addresses directly to Datadog. See https://ip-ranges.datadoghq.com/ for information. For event broker services: This is required for monitoring traffic to the central monitoring service (Datadog). For details about the external IP addresses, see Getting the IP Addresses for Monitoring Traffic. | 443 | Required for monitoring traffic and metrics. TLS encrypted traffic between each Datadog agent (one per Solace pod, including Mission Control Agent) and Datadog server. Note that for the Mission Control Agent, you must configure the addresses directly. |
| Kubernetes to Solace Container Registry | gcr.io (storage.googleapis.com) | This is not a single fixed IP address but can be proxied. | 443 | Required to download Solace's container images. TLS encrypted traffic between each Kubernetes cluster and gcr.io. Note: You do not need to allow this host and port combination if you choose to configure an image repository in your data center to mirror Solace's Container Registry (gcr.io). For more information, see the Solace Container Registry information in Connectivity Model for Kubernetes Deployments. |
| Mission Control Agent to Home Cloud | maas-secure-prod.s3.amazonaws.com | N/A | 443 | Required to download the certificate files for the created event broker service. |
| | {bucket_name}.s3.amazonaws.com | N/A | 443 | This is a unique value for each private data center. Refer to the table of bucket names when deploying advanced event mesh. |
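Before you install the Mission Control Agent, it can help to confirm from inside the cluster network that the fixed hosts and ports listed above accept TCP connections. The following is a minimal sketch in Python, not an official tool: the names `REQUIRED_ENDPOINTS`, `can_connect`, and `check_endpoints` are hypothetical, and the list only contains the entries that have a fixed host name in the table. It opens direct TCP connections, so it does not reflect traffic that is routed through an HTTP proxy.

```python
import socket

# Hosts and ports copied from the connection requirements table.
# Entries without a fixed host name (Datadog, the diagnostics bucket) are omitted.
REQUIRED_ENDPOINTS = [
    ("production-ivmr.messaging.solace.cloud", 55443),  # Home Cloud, US regional site
    ("gcr.io", 443),                                    # Solace Container Registry
    ("storage.googleapis.com", 443),                    # storage behind gcr.io
    ("maas-secure-prod.s3.amazonaws.com", 443),         # certificate downloads
]


def can_connect(host, port, timeout=5.0):
    """Return True if a direct TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False


def check_endpoints(endpoints, timeout=5.0):
    """Map each (host, port) pair to the result of one connection attempt."""
    return {
        (host, port): can_connect(host, port, timeout)
        for host, port in endpoints
    }
```

Calling `check_endpoints(REQUIRED_ENDPOINTS)` from a pod or node in the cluster returns a dictionary that shows which endpoints are blocked. A successful TCP connection only shows that the port is open; it does not validate TLS or credentials.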

S3 Bucket Names for Gathered Diagnostics

As detailed in the Connection Requirements for SAP Integration Suite, Advanced Event Mesh Components table above, a host address to an Amazon S3 bucket is required for gathering diagnostics. Replace {bucket_name} in the {bucket_name}.s3.amazonaws.com string with the appropriate value from the S3 Bucket Name column in the table below. When selecting the S3 bucket, choose the one that is geographically closest to the region where your event broker services are being deployed.

| S3 Bucket Name | AWS Region |
|---|---|
| solace-gd-af-south-1 | Africa (Cape Town) – af-south-1 |
| solace-gd-ap-northeast-1 | Asia Pacific (Tokyo) – ap-northeast-1 |
| solace-gd-ap-northeast-2 | Asia Pacific (Seoul) – ap-northeast-2 |
| solace-gd-ap-northeast-3 | Asia Pacific (Osaka) – ap-northeast-3 |
| solace-gd-ap-south-1 | Asia Pacific (Mumbai) – ap-south-1 |
| solace-gd-ap-southeast-1 | Asia Pacific (Singapore) – ap-southeast-1 |
| solace-gd-ap-southeast-2 | Asia Pacific (Sydney) – ap-southeast-2 |
| solace-gd-ca-central-1 | Canada (Central) – ca-central-1 |
| solace-gd-eu-central-1 | EU (Frankfurt) – eu-central-1 |
| solace-gd-eu-north-1 | EU (Stockholm) – eu-north-1 |
| solace-gd-eu-west-1 | EU (Ireland) – eu-west-1 |
| solace-gd-eu-west-2 | EU (London) – eu-west-2 |
| solace-gd-eu-west-3 | EU (Paris) – eu-west-3 |
| solace-gd-us-east-1 | US East (N. Virginia) – us-east-1 |
| solace-gd-us-east-2 | US East (Ohio) – us-east-2 |
| solace-gd-us-west-1 | US West (N. California) – us-west-1 |
| solace-gd-us-west-2 | US West (Oregon) – us-west-2 |
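The bucket-name substitution described above can be sketched as follows. This is an illustrative Python helper only: the names `DIAGNOSTICS_BUCKETS` and `diagnostics_host` are hypothetical, and the bucket names are copied from the table above. Rejecting names that are not in the table guards against typos ending up in a firewall rule.

```python
# Bucket names copied from the S3 Bucket Name column of the table above.
DIAGNOSTICS_BUCKETS = (
    "solace-gd-af-south-1",
    "solace-gd-ap-northeast-1",
    "solace-gd-ap-northeast-2",
    "solace-gd-ap-northeast-3",
    "solace-gd-ap-south-1",
    "solace-gd-ap-southeast-1",
    "solace-gd-ap-southeast-2",
    "solace-gd-ca-central-1",
    "solace-gd-eu-central-1",
    "solace-gd-eu-north-1",
    "solace-gd-eu-west-1",
    "solace-gd-eu-west-2",
    "solace-gd-eu-west-3",
    "solace-gd-us-east-1",
    "solace-gd-us-east-2",
    "solace-gd-us-west-1",
    "solace-gd-us-west-2",
)


def diagnostics_host(bucket_name):
    """Return the S3 host name to allow on port 443 for the given bucket."""
    if bucket_name not in DIAGNOSTICS_BUCKETS:
        raise ValueError(f"unknown diagnostics bucket: {bucket_name!r}")
    return f"{bucket_name}.s3.amazonaws.com"
```

For example, a deployment closest to Frankfurt would call `diagnostics_host("solace-gd-eu-central-1")` and allow the returned host on port 443.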

Connectivity for the Monitoring of Event Broker Services

Datadog connectivity is required to monitor event broker services and to use Insights.

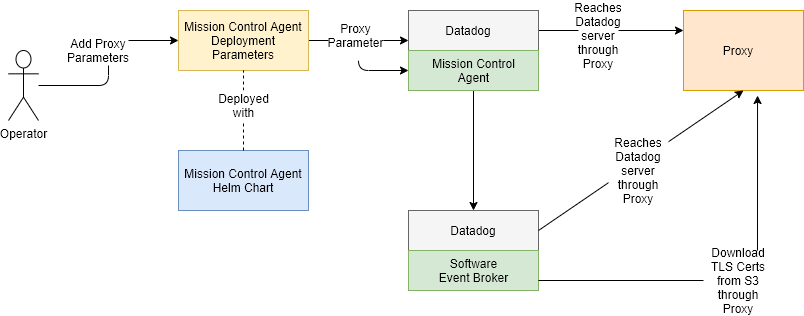

As shown in the diagram below, a proxy parameter (containing the proxy server to use) is added to the Helm chart (in the values.yaml file). When the Mission Control Agent is installed, its Datadog's sidecar container is created and configured to use that proxy server. The Mission Control Agent also receives the proxy server parameters via its properties files. Because the Mission Control Agent has this parameter configured, it configures every event broker service it launches to use the proxy server as well:

- The Datadog sidecar containers in each software event broker pod are configured to use the proxy server.

- The init.sh entry point script in each software event broker pod is configured to download certificates via the proxy server.

The Mission Control Agent gets its properties files from a ConfigMap instead of from the Solution-Config-Server.
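If you allow Datadog traffic by address rather than through a proxy, the ranges published at https://ip-ranges.datadoghq.com/ have to be turned into allow-list entries. The following Python sketch shows one way to do that. It assumes that the published JSON document groups its ranges into sections that each carry a `prefixes_ipv4` list; verify that layout against the live document before relying on it. The function names are hypothetical.

```python
import ipaddress
import json
import urllib.request

DATADOG_RANGES_URL = "https://ip-ranges.datadoghq.com/"


def fetch_ranges(url=DATADOG_RANGES_URL, timeout=10.0):
    """Download and parse the published JSON document."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)


def collect_ipv4_prefixes(ranges):
    """Return the sorted, de-duplicated IPv4 networks found in all sections.

    Assumption: each section is a JSON object with a "prefixes_ipv4" list
    of CIDR strings. Top-level values that are not objects are skipped.
    """
    prefixes = set()
    for section in ranges.values():
        if isinstance(section, dict):
            for cidr in section.get("prefixes_ipv4", []):
                prefixes.add(ipaddress.ip_network(cidr, strict=False))
    return sorted(prefixes)
```

Calling `collect_ipv4_prefixes(fetch_ranges())` yields the networks to allow on port 443. Because published ranges can change, a scheduled refresh is safer than a one-time copy.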

Management Connectivity for Event Broker Services

Your network must permit management traffic to allow the administration of your event broker services, for example, to configure system-wide features such as clustering and guaranteed messaging. Management traffic can flow:

- over SSH to the Solace CLI

- from a browser to Broker Manager

- from applications to the event broker services using SEMP

For more information about the protocols and ports, see Protocol and Port Client Applications for Management.

You must have connectivity to the same network as the event broker services to access Broker Manager. You cannot access Broker Manager from the public Internet if:

- the services do not have access to the public Internet (for example, in a VPC/VNet) or do not have public endpoints

- only private endpoints are configured on the service

For more information, see Broker Manager.

You may need to configure your network to allow the ports used by management traffic. For details about the port configuration of event broker services, see Configuring Client and Management Ports.

Messaging Connectivity for Outbound Connections and Client Applications

You must configure your network to allow outbound connections initiated by event broker services and connections from client applications. Although this connectivity is not required during deployment, we recommend that you configure it because:

- Much of your network connectivity architecture is being defined during deployment.

- You can define all the necessary ports at one time with your security team.

You should ensure that your network architecture takes into account the following types of connectivity:

- Connectivity to permit messaging traffic between event broker services and client applications external to the Kubernetes cluster

  - The messaging traffic includes various messaging patterns (point-to-point, publish-subscribe, request-reply) for event messaging. The ports used depend on the protocol and typically go through a public load balancer for external clients. For example, client applications that connect to event broker services to publish and subscribe to event data would use this type of traffic. For more information, see Connectivity Between Event Broker Services and Client Applications.

  - If you are using a load balancer to expose your event broker services to external client applications, SAP recommends setting the externalTrafficPolicy to Local. For more information, see our traffic policy recommendation in Using an Integrated Load Balancer Solution.

  - Messaging client applications can connect from other VPCs/VNets or from the public Internet. Alternatively, you may decide not to permit clients from external IP addresses to connect, for example, if your messaging client applications reside in the same Kubernetes cluster. For details about these options, see Exposing Event Broker Services to External Traffic.

- Connectivity for outbound traffic from event broker services to hosts outside the Kubernetes cluster

  - Outbound traffic to external hosts refers to traffic where the connection is initiated by the event broker services. For private networks that require outbound traffic to external hosts, customers must use a NAT configured with a public-facing, static IP address.

  - For more details on the networking architecture, see Using NAT with Static IP Addresses for Outbound Connections for Kubernetes-Based Deployments.

  - For information about outbound connections, see Outbound Connections Initiated by Event Broker Services.
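To make the load balancer recommendation above concrete, the following Python sketch builds a Kubernetes Service manifest with the traffic policy set to `Local` and prints it as JSON, which Kubernetes accepts alongside YAML. It is an illustration of the field only: the function name, service name, selector, and port are hypothetical, and in an actual deployment the services for event brokers may be created for you rather than written by hand.

```python
import json


def load_balancer_service(name, selector, ports):
    """Build a Service manifest of type LoadBalancer with a Local traffic policy.

    ports is a list of (port_name, port_number) pairs. All values passed in
    are placeholders for illustration.
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            # Local sends external traffic only to pods on the receiving node,
            # which preserves the client source IP address.
            "externalTrafficPolicy": "Local",
            "selector": selector,
            "ports": [
                {"name": port_name, "port": number, "targetPort": number, "protocol": "TCP"}
                for port_name, number in ports
            ],
        },
    }


manifest = load_balancer_service(
    name="example-broker",                # hypothetical service name
    selector={"app": "example-broker"},   # hypothetical pod label
    ports=[("smf-tls", 55443)],           # example client port, adjust to your configuration
)
print(json.dumps(manifest, indent=2))
```

With `Local`, nodes that do not run a matching pod do not forward the traffic, so the load balancer health checks need to target only the nodes that host event broker pods.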