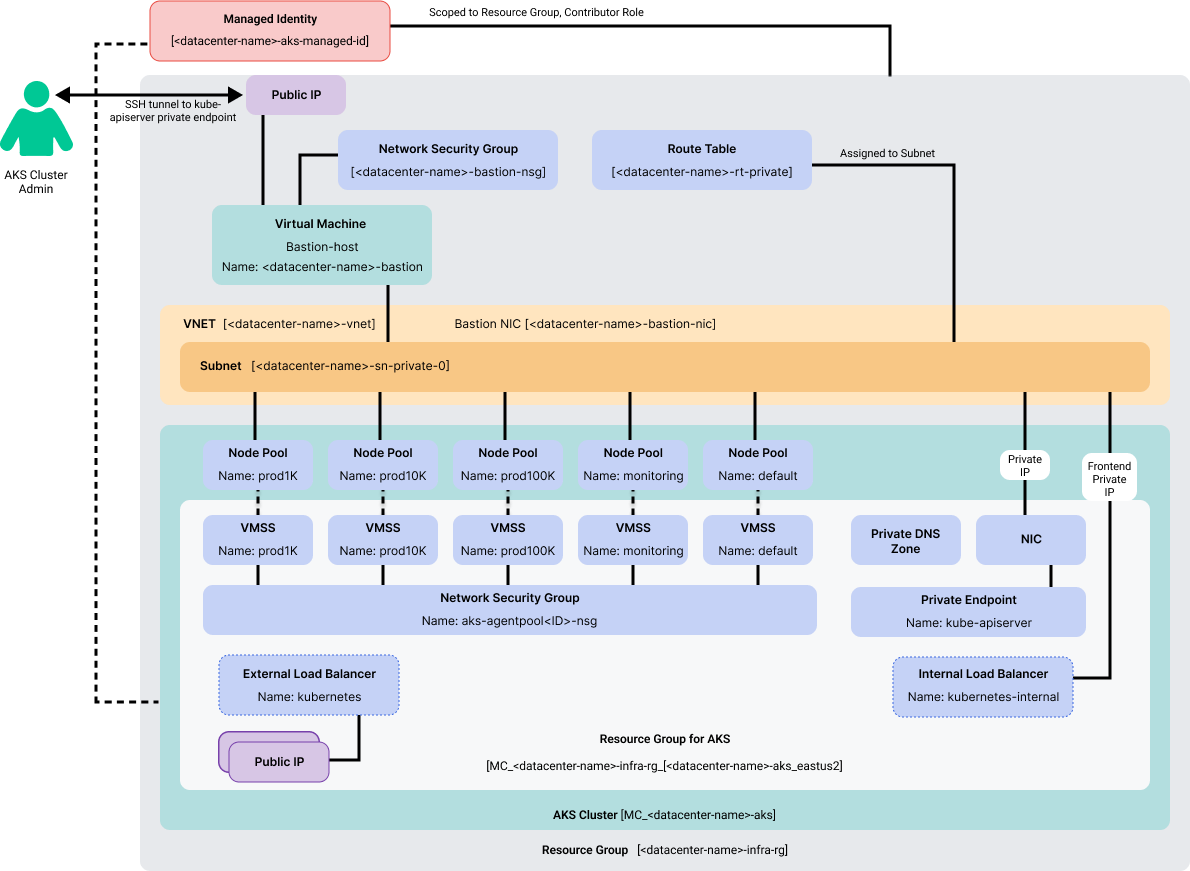

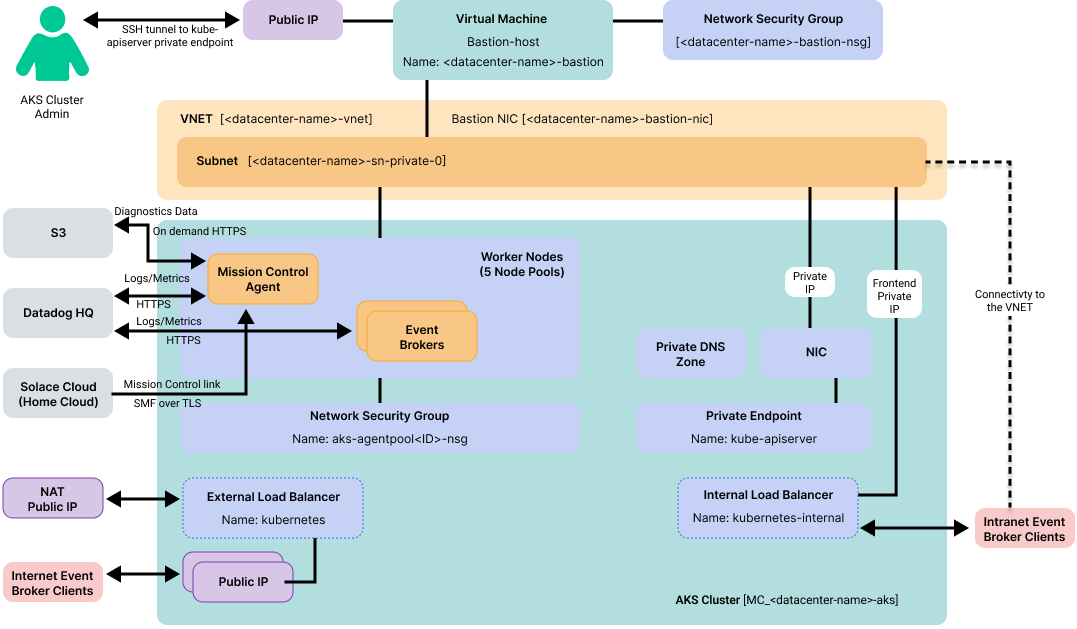

The following diagrams and component descriptions describe a typical Advanced Event Mesh for SAP Integration Suite deployment in Azure Kubernetes Service (AKS).

AKS Deployment

Azure Network Connectivity

This diagram shows the network connections for the various components.

| Component | Description |
|---|---|
| Managed Identity | |
| Resource Group | Name: `<datacenter-name>-infra-rg` |
| Route Table | |
| VNET | |
| Bastion Host | |
| AKS Cluster | |
| Default Node Pool | |
| Prod1k Node Pool | |
| Prod10k Node Pool | |
| Prod100k Node Pool | |
| Monitoring Node Pool | |
| Resource Group (Managed by AKS) | |
| Public Load Balancer | |
| Public IP Address for load balancer outgoing rules | |
| Public IP Address for broker public access and load balancer ingress rules | |
| Internal Load Balancer | |
| Network Security Group | |
| Network Interface | |
| Private Endpoint | |
| Private DNS Zone | |
| Virtual Machine Scale Set (Default Node Pool) | |
| Virtual Machine Scale Set (Prod1k Node Pool) | |
| Virtual Machine Scale Set (Prod10k Node Pool) | |
| Virtual Machine Scale Set (Prod100k Node Pool) | |
| Virtual Machine Scale Set (Monitoring Node Pool) | |

For high-availability event broker services, each node pool that hosts an event broker service must be locked to a single availability zone; this is required for the cluster autoscaler to function properly. SAP applies pod anti-affinity against the nodes' zone label so that each pod of a high-availability event broker service runs in a separate availability zone. See Node Pool Requirements for more information.
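The following minimal sketch illustrates the zone-spreading idea. It is not SAP's actual configuration. It builds a Kubernetes `podAntiAffinity` stanza keyed on the well-known `topology.kubernetes.io/zone` node label. It then checks that the pods of one HA service land in distinct zones, given that each node pool maps to exactly one zone. The label key `example.com/broker-service`, the node pool names, the zone values, and the primary/backup/monitoring pod names are all hypothetical.

```python
import json

# Well-known Kubernetes node label holding the node's availability zone.
ZONE_KEY = "topology.kubernetes.io/zone"


def ha_anti_affinity(service_name: str) -> dict:
    """Return an affinity stanza that forbids two pods of the same
    event broker service from being scheduled into the same zone.

    The label key "example.com/broker-service" is hypothetical.
    """
    return {
        "podAntiAffinity": {
            "requiredDuringSchedulingIgnoredDuringExecution": [
                {
                    "labelSelector": {
                        "matchLabels": {"example.com/broker-service": service_name}
                    },
                    "topologyKey": ZONE_KEY,
                }
            ]
        }
    }


def zones_are_distinct(placement: dict, pool_zones: dict) -> bool:
    """Check that every pod of one HA service runs in a different zone.

    placement maps pod name -> node pool name; pool_zones maps node pool
    name -> the single zone that pool is locked to. Because each pool has
    exactly one zone, a pod's zone follows directly from its pool.
    """
    zones = [pool_zones[pool] for pool in placement.values()]
    return len(zones) == len(set(zones))


if __name__ == "__main__":
    # Hypothetical zone-locked node pools (one zone per pool).
    pool_zones = {
        "prod1kz1": "eastus-1",
        "prod1kz2": "eastus-2",
        "monitorz3": "eastus-3",
    }
    # Hypothetical HA group: primary, backup, and monitoring pods.
    placement = {
        "broker-primary": "prod1kz1",
        "broker-backup": "prod1kz2",
        "broker-monitor": "monitorz3",
    }
    print(json.dumps(ha_anti_affinity("my-service"), indent=2))
    print(zones_are_distinct(placement, pool_zones))  # True
```

If a node pool spanned several zones, the mapping from pool to zone would no longer be a single value. This is one way to see why locking each pool to one zone keeps placement predictable.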